Machine Mind, Human Heart

Artificial intelligence can amplify humanity’s worst flaws. Emory is working to make sure AI instead exemplifies our highest virtues.

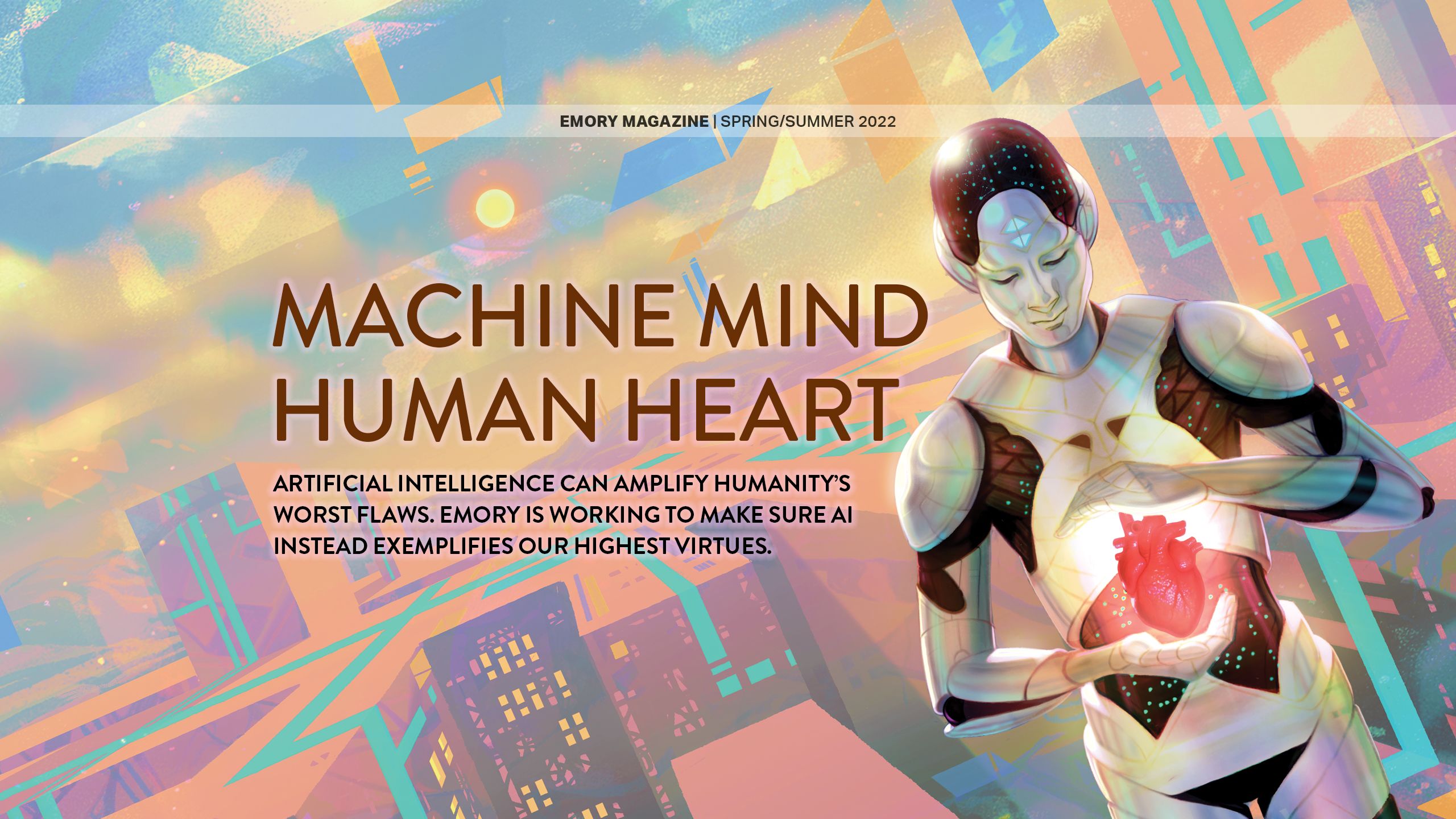

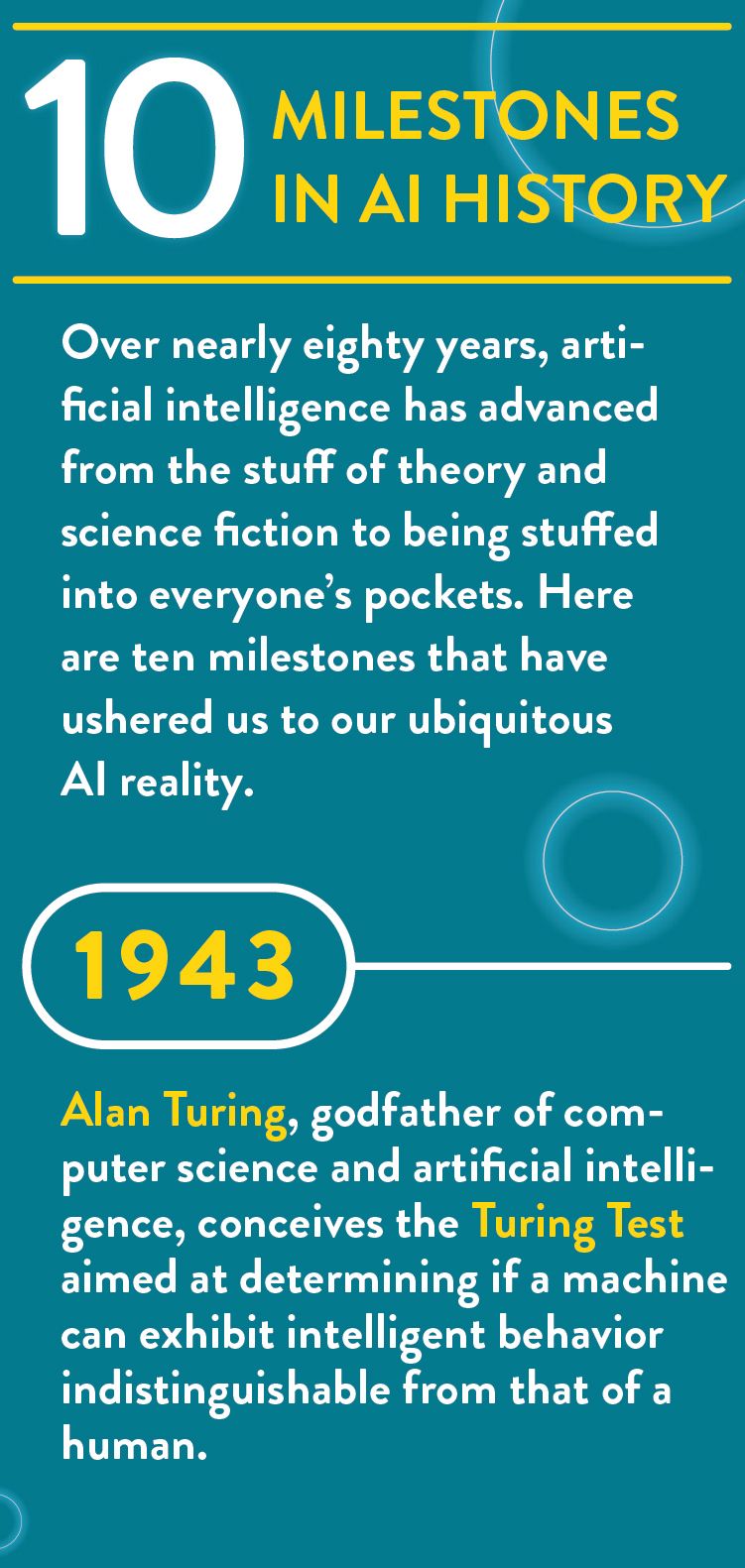

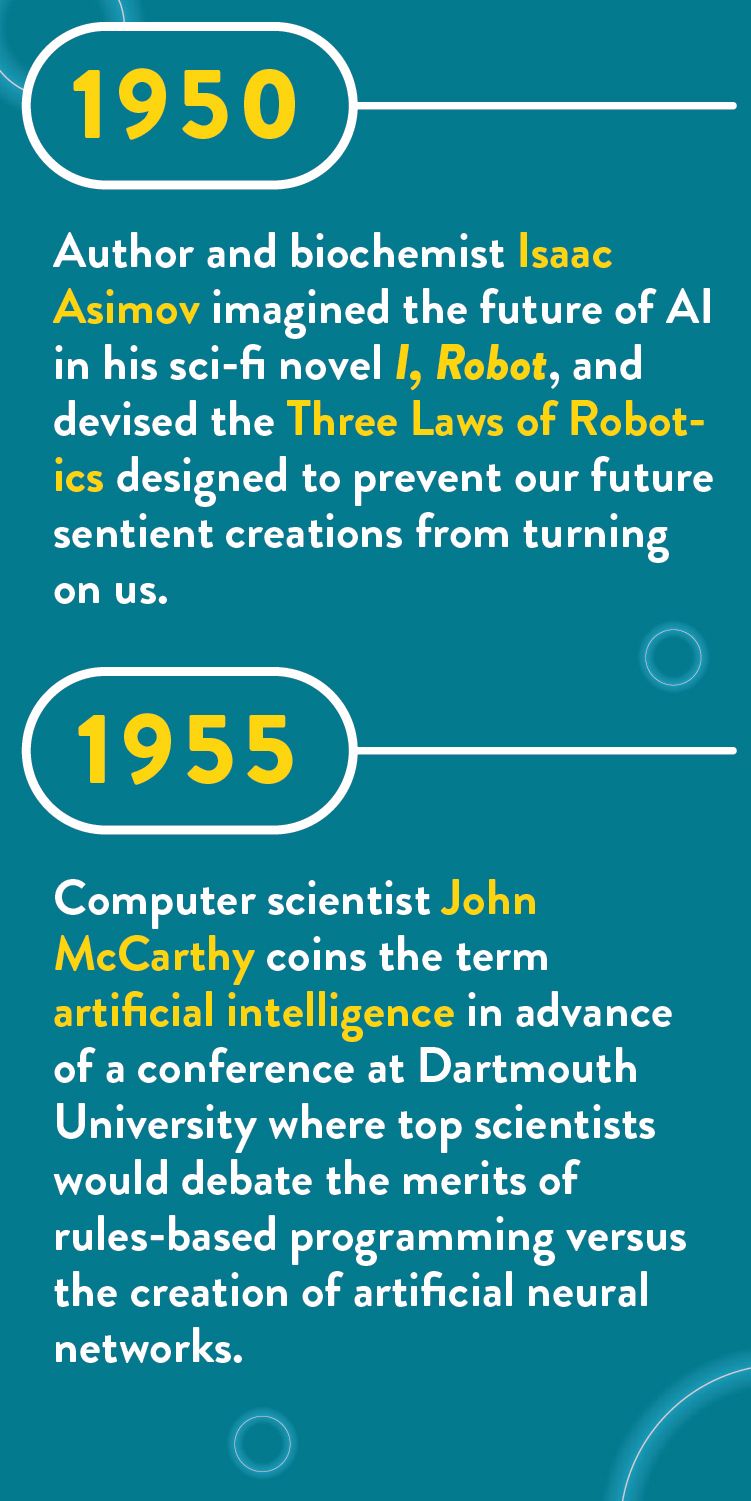

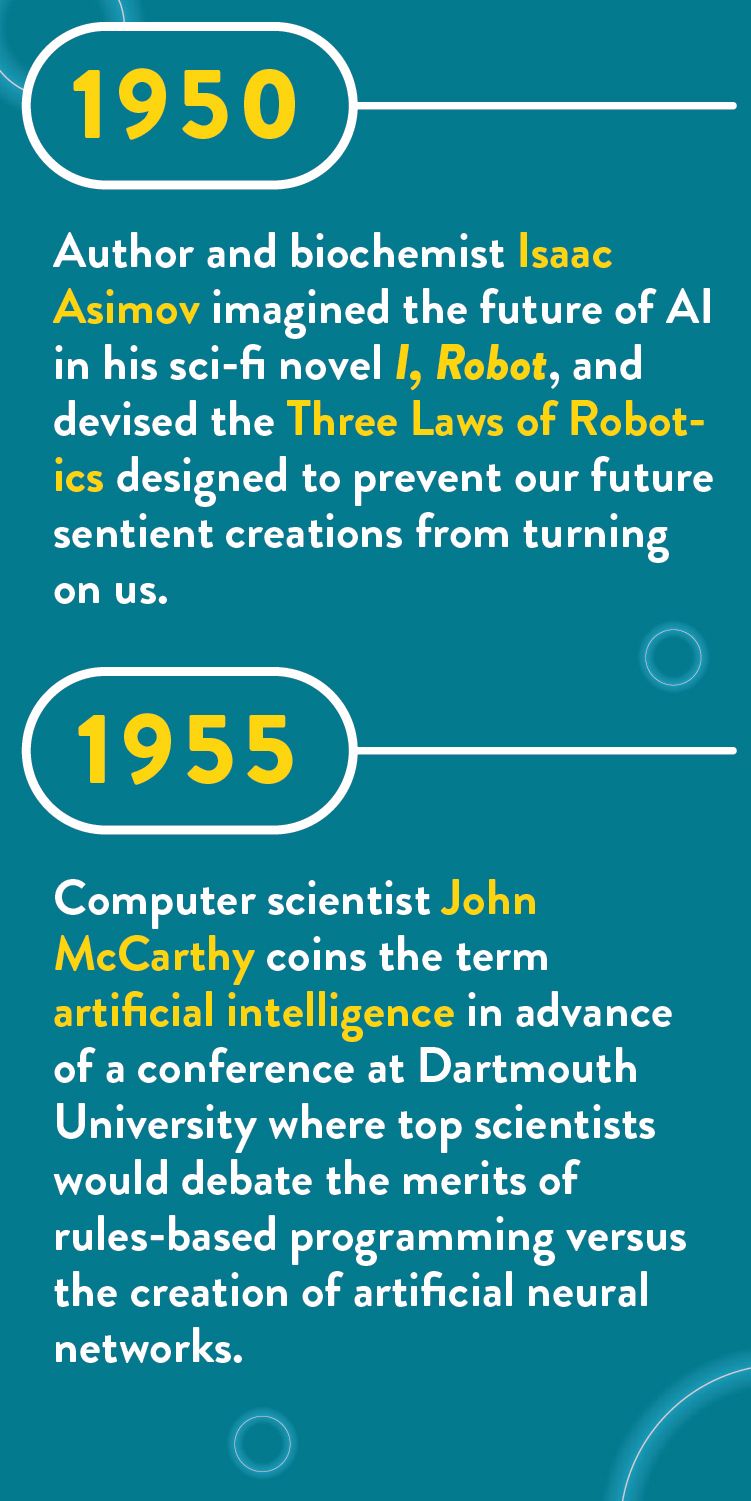

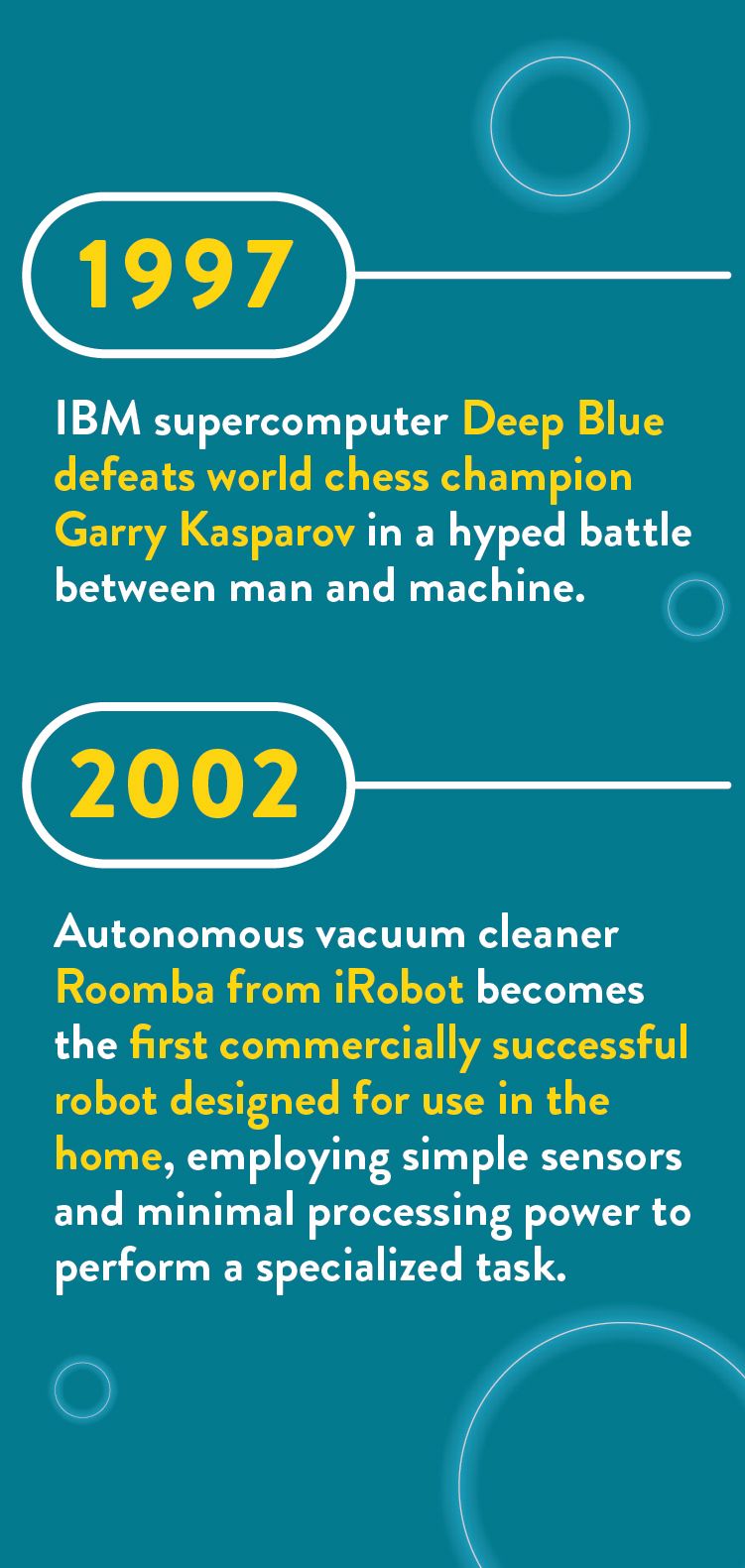

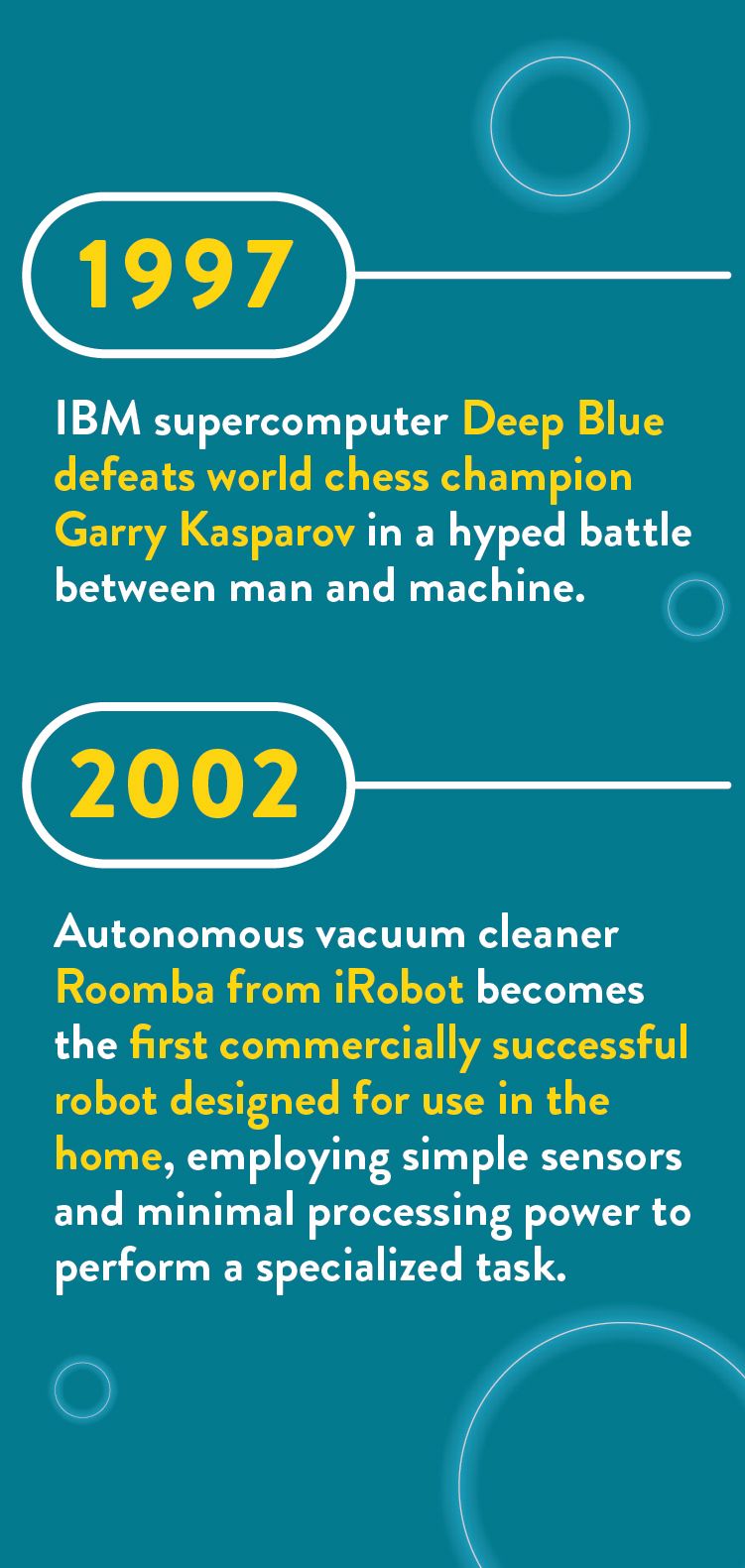

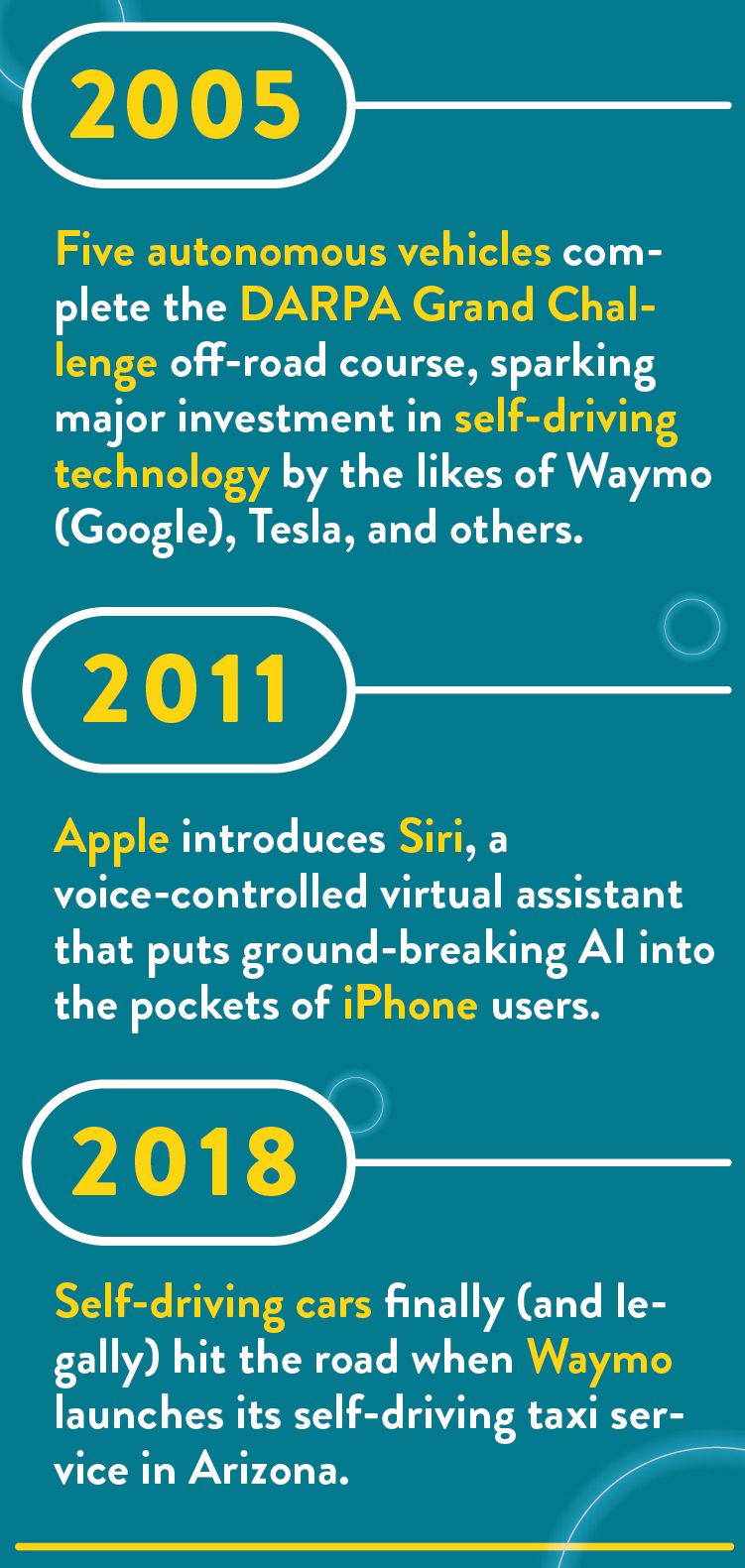

Our fear of artificial intelligence long predates AI’s actual existence. People have a natural apprehension in the face of any technology designed to replace us in some capacity. And ever since we created the computer, a box of chips and circuits that almost seemed to think on its own (hello, HAL 9000), the collective countdown to the robot apocalypse has been steadily ticking away.

But while we’ve been bracing to resist our automaton overlords in some winner-take-all sci-fi battle, a funny thing happened: The smart machines quietly took over our lives without our really noticing. The invasion didn’t come from Skynet’s Terminators or the Agents of The Matrix; it took place in our pockets, in the grocery checkout line, on our roads, in our hospitals, and at the bank.

“We are in the middle of an AI revolution,” says Ravi V. Bellamkonda, Emory University’s provost and executive vice president for academic affairs. “We have a sense of it. But we’re not yet fully comprehending what it’s doing to us.”

Bellamkonda and his colleagues at Emory are among the first in higher education to dedicate themselves, across disciplines, to figuring out precisely what impact the rapid spread of AI is having on us, and how we can better harness its power.

RAVI V. BELLAMKONDA, provost and executive vice president for academic affairs


For the most part, this technology comes in peace. It exists to help us and make our lives easier, whether it’s ensuring more precise diagnoses of diseases, driving us safely from place to place, monitoring the weather, entertaining us, or connecting us with each other. In fact, the real problem with AI isn’t the technology itself; it’s the human element. While truly autonomous artificial intelligence hasn’t been achieved (yet), the machine learning models that have snuck into every facet of our lives are essentially algorithms created by humans, trained on datasets compiled and curated by humans, employed at the whims of humans, and producing results interpreted by humans. That means the use of AI is rife with human bias, greed, expectation, negligence, and opaqueness, and its output is subject to our reactions.

Indeed, the emergence of AI presents an unprecedented test of our ethics and principles as a society. “Ethics is intrinsic to AI,” says Paul Root Wolpe, bioethicist and director of Emory’s Center for Ethics.

“If you think about the ethics of most things, the ethics are in how you use that thing. For instance, the ethics of organ transplantation is in asking ‘Should we perform the procedure?’ or ‘How should we go about it?’ Those are questions for the doctor—the person who develops the technology of organ transplantation may never have to ask that question,” Wolpe says.

“But AI makes decisions, and because decisions have ethical implications, you can’t build algorithms without thinking about ethical outcomes.”

Of course, just because the scientists and engineers realize the implications of their creations doesn’t mean they are equipped to make those momentous decisions on their own, especially when some of their models will literally have life-or-death implications. These algorithms will do things like decide whether a spot on an X-ray is a benign growth or a potentially life-threatening tumor, use facial recognition to identify potential suspects in a crime, or use machine learning to determine who should qualify for a mortgage. Is it really better for society to have engineers working for private companies deciding what datasets most accurately represent the population? Is it even fair to place that burden on them? What is the alternative?

The answer might be the very thing we’ve already identified as AI’s key flaw—humanity. If the big-data technology is going to continue to take on more and more responsibility for making decisions in our lives—if AI is truly the cold, calculating brain of the future—then it’s up to us to provide the heart. And Bellamkonda and Wolpe are among the forward-thinking leaders who believe we can do that by incorporating the humanities at every step of the process. One way to accomplish that is with existing ethics infrastructure. Ethics has long been a concern in medical science, for example, and there are many existing bioethics centers that are already handling AI-related questions in medicine.

PAUL ROOT WOLPE, bioethicist and director of Emory’s Center for Ethics


At Emory, the Center for Ethics boasts a world-class bioethics program, but also includes ethicists with decades of experience tackling issues that extend far beyond medicine alone, such as business, law, and social justice—all realms that are being impacted by the emergence of machine learning. “I’m a proponent of prophylactic ethics,” says Wolpe. “We need to think about the ethical implications of this AI before we put it in the field. The problem is this isn’t happening through a centralized entity, it’s happening through thousands of start-ups in dozens of industries all over the world. You have too much and too dispersed AI to centralize this thinking.”

Instead, these ethics centers could be used not only as review boards for big AI decisions, but also as training centers for people working in machine learning at research institutions, private companies, and government oversight agencies. “We’ve discussed developing an online certification program in AI ethics,” says John Banja, a professor of rehabilitation medicine and a medical ethicist at Emory. “There are already a lot of folks in the private sector who are concerned about the impacts of AI technologies and don’t want their work to be used in certain ways.”

A more grassroots, and potentially farther-reaching, way to tackle this problem might be to pair these statisticians, computer scientists, and engineers with students, teachers, and researchers across the humanities while they are still in school, so they can collaborate on the design of AI moving forward.

At Emory, Provost Bellamkonda has launched a revolutionary initiative called AI.Humanity that seeks to build these partnerships by hiring between sixty and seventy-five new faculty across multiple departments, placing experts in AI and machine learning all over campus, and creating an intertwined community to advance AI-era education and the exchange of ideas.

“Our job as a liberal arts university is to think about what this technology is doing to us,” says Bellamkonda. “We can’t have technologists just say: ‘I created this, I’m not responsible for it.’ This will be a profound change at Emory—an intentional decision to put AI specialists and technologists not in one place, but to embed them across business, chemistry, medicine, and other disciplines, just as you would with any resource.”

While the AI.Humanity initiative has just begun, there are already several projects at Emory that have shown the potential of bringing ethics and a wide range of disciplines to bear when developing, implementing, and responding to AI in different settings.

Let’s look at four Emory examples that might serve as models for conscientious progress as AI and machine learning become even more commonplace in our day-to-day lives. Perhaps putting the human heart in AI will not only lead to a more efficient, equitable, and effective deployment of this technology, but it might also give humanity better insight and more control when the machines really do take over.

For many people who work in the humanities, the advent of the digital age—the continuous integration of computers, internet, and machine learning into their work and research—has been incidental, something they’ve merely had to adapt to. For Emory’s Lauren Klein, it was the realization of her dream job.

Klein grew up a bookworm who was also fascinated with the Macintosh computer her mother had bought for the family, and she spent much of her career searching for a way to combine reading and computers. Then came the rise of digital humanities, the study of the use of computing and digital technologies in the humanities. Specifically, Klein homed in on the intersection of data science and American culture, with a focus on gender and race. She co-wrote a book, Data Feminism (MIT Press, 2020), a groundbreaking look at how intersectional feminism can chart a course to more equitable and ethical data science.

LAUREN KLEIN, associate professor of English and quantitative theory and methods in Emory College of Arts and Sciences


The book also presents examples of how to use the teachings of feminist theory to direct data science toward more equitable outcomes. “In the year 2022, it’s not news that algorithmic systems are biased,” says Klein, now an associate professor in the departments of English and Quantitative Theory and Methods (QTM). “Because they are trained on data that comes from the world right now, they cannot help but reflect the biases that exist in the world now: sexism, racism, ableism. But feminism has all sorts of strategies for addressing bias that data scientists can use.”

Klein’s joint appointment in the English department and QTM is an example of the cross-pollination designed to foster thoughtful collaboration around new technologies. “She’s bringing a humanistic critique of the AI space,” says Cliff Carrubba, department chair of QTM at Emory. “A social scientist would call that looking at the mechanism of data collection. Each area has an expertise. Humanists have depth of knowledge of history and origins, and we can merge that expertise with other areas.”

In addition to her own research, which currently includes compiling an interactive history of data visualization from the 1700s to the present, a quantitative analysis of abolitionism in the 1800s, and a dive into census numbers that failed to note “invisible labor,” or work that takes place in the home, Klein is also co-teaching a course at Emory called Introduction to Data Justice. The goal is to help students across disciplines come to grips with the concepts of bias, fairness, and discrimination in data science, and how they play out when the datasets are used to train AI.

“It’s a way of thinking historically and contextually about these models in a way that humanists are best trained to do,” says Klein. “It’s a necessary complement to the work of model development, and it’s thrilling to bring these areas together. To me, the most exciting work is interdisciplinary work.”

While AI and machine learning are relatively new to many fields, the idea of computers using data to help us make decisions has been in our hospitals for decades. As a result, once big data and neural networks came around, they were readily adopted into clinical workflows, especially in the realm of radiology.

At first, these algorithms served to relieve and double-check the eyes of radiologists, who typically spend eight to ten hours a day staring at images until they are so benumbed that they’re bound to miss something. Later, they were used to automate tasks even well-rested humans aren’t very good at, like measuring whether a tumor has grown, the space between discs in the spine, or the amount of plaque built up in an artery. Eventually, though, the technology evolved to the point where it could scan an image and identify, classify, and even predict the outcome of disease. And that’s when the real problems arose.

JUDY GICHOYA, assistant professor of interventional radiology and informatics at Emory School of Medicine


For instance, Judy Gichoya, a multidisciplinary researcher in both informatics and interventional radiology, was part of a team that found that AI designed to read medical images like X-rays and CT scans could incidentally also predict the patient’s self-reported race just by looking at the scan, even from corrupted or cropped images. Perhaps even more concerning: Gichoya and her team could not figure out how or why the algorithm could pinpoint the person’s race.

Regardless of why, the results of the study indicate that if these systems can discern a person’s racial background so easily and accurately, our understanding of the deep learning models behind them isn’t deep enough. “We need to better understand the consequences of deploying these systems,” says Gichoya, assistant professor in the Division of Interventional Radiology and Informatics at Emory. “The transparency is missing.”

She is now building a global network of AI researchers across disciplines (doctors, coders, scientists, etc.) who are concerned about bias in these systems and fairness in imaging. The self-described “AI Avengers” span six universities and three continents. Their goal is to build and provide diverse datasets to researchers and companies to better ensure that their systems work for everyone.

Meanwhile, her lab, the Healthcare Innovation and Translational Informatics Lab at Emory, which she co-leads with Hari Trivedi, has just released the EMory BrEast Imaging Dataset (EMBED), a racially diverse, granular dataset of 3.5 million screening and diagnostic mammograms. This is one of the most diverse datasets for breast imaging ever compiled, representing 116,000 women divided equally between Black and white, in hopes of creating AI models that will better serve everyone. “People think bias is always a bad thing,” says Gichoya. “It’s not. We just need to understand it and what it means for our patients.”

A NEW VISION FOR AI

Take a closer look at Emory's AI.Humanity Initiative, which aims to make a foundational shift in higher ed across all university disciplines.

There has been much focus on what type of data we train these machines on and how those algorithms work to produce actionable results. But then what? There’s a third part to this human-AI interface that is just as important as the first two: how humans and the larger systems we have in place react to this data.

“Causal mechanisms, the reason things happen, really matter,” says Carrubba. “At Emory, we have a community beyond machine learners from a variety of specializations, from statisticians to econometricians to formal theorists, with interests across the social sciences like law, business, and health, who can help us anticipate things like human response.”

RAZIEH NABI, assistant professor of biostatistics and bioinformatics at Rollins School of Public Health


One such person is Razieh Nabi, assistant professor of biostatistics and bioinformatics at Rollins School of Public Health. Nabi is conducting groundbreaking research in the realm of causal inference as it pertains to AI: identifying the underlying causes of an event or behavior that predictive models fail to account for. Identifying these causes can be complicated by factors such as missing or censored values, measurement error, and dependent data.

“Machine learning and prediction models are useful in many settings, but they shouldn’t be naively deployed in critical decision making,” says Nabi. “Take when clinicians need to find the best time to initiate treatment for patients with HIV. An evidence-based answer to this question must take into account the consequences of hypothetical interventions and get rid of spurious correlations between the treatment and outcome of interest, which machine learning algorithms cannot do on their own. Furthermore, sometimes the full benefit of treatments is not realized, since patients often don’t fully adhere to the prescribed treatment plan, due to side effects or disinterest or forgetfulness. Causal inference provides us with the necessary machinery to properly tackle these kinds of challenges.”

Part of Nabi’s research has been motivated by the limitations of methods proposed in existing work. One example is the emerging field of algorithmic fairness, which grapples with the problem noted earlier: despite the illusion that machine learning algorithms are objective, they can actually perpetuate the historical patterns of discrimination and bias reflected in the data, she says.

“In my opinion, AI and humans can complement each other well, but they can also reflect each other’s shortcomings,” Nabi says. “Algorithms rely on humans in every step of their development, from data collection and variable definition to how decisions and findings are placed into practice as policies. If you’re not using the training data carefully, it will be reflected poorly in the consequences.”

Nabi’s work combats these confounding variables by using statistical theory and graphical models to better illustrate the complete picture. “Graphical models tell the investigator what these mechanisms look like,” she says. “It’s a powerful tool when we want to know when and how we identify these confounding quantities.”

Her work is focused on health care, social justice, and public policy. But Nabi’s hope is that researchers will be able to better account for the human element when designing, applying, and interpreting the results of predictive machine learning models across all fields.

EMORY'S AI.HUMANITY INITIATIVE

Bringing together the full intellectual power of Emory University to shape the AI revolution to better human health, generate economic value, and promote social justice.

Of course, as machine learning finds its way into these various fields and daily interactions, there is an overarching concern that goes hand in hand with the ethical considerations: how our use of AI impacts the law.

Kristin Johnson is the Asa Griggs Candler Professor of Law at Emory’s School of Law. She is internationally known for her research focusing on digital assets and AI used in commercial transactions, and she has co-authored a forthcoming book about the ethical implications of machine learning and its place in a just society. “We’re looking at a number of ways that AI is altering how we apply and understand the law,” says Johnson. “It’s having a profound effect on our understanding of our Constitutional rights, competition law, and human rights.”

KRISTIN JOHNSON, Asa Griggs Candler Professor of Law at Emory School of Law


Johnson points out that, like most other professions and institutions, the justice system is experiencing a direct impact from this technology. This includes the use of predictive models to inform bail assessments, estimate potential recidivism, and determine eligibility for social benefits.

There are also larger concerns about people’s rights when it comes to the use of machine learning, particularly with regard to privacy when collecting the data on which these predictive models are trained. “We need to ensure this technology is adopted and applied in a way that is consistent with constitutional norms and protects the values those laws are intended to preserve,” she says.

But whether it’s our right to protect our personal information or trying to negotiate a plea deal with a robot lawyer, Johnson echoes the concerns of her colleagues at Emory: Don’t forget the importance of the human element in the face of transformational technological change.

“AI is limited in its ability to provide legal services,” she says, “because there remains a necessity for empathy and understanding that currently only humans bring to the profession.”

Story by Tony Rehagen. Illustration by Charles Chaisson. Photography by Stephen Nowland. Design by Elizabeth Hautau Karp.
